2020 AFPM Summit: Improving process modeling with benchmarking data

ADRIENNE BLUME, Executive Editor, Hydrocarbon Processing

At AFPM's virtual Summit on Wednesday, a morning session on monitoring and improving equipment operations featured Garry Jacobs, Technical Director at Fluor Corp., and Jesus Cabrera, Process Director at Fluor Corp. The speakers discussed how readily available benchmarking data can be used to improve process modeling and decision-making.

The speakers shared three examples of performance benchmarking for a vacuum column, a reformate splitter and parallel exchanger trains.

Example 1: "Lost in the mist." In the first example, an analysis of a vacuum column ran into difficulty reconciling plant and laboratory data. All data appeared reasonable at first inspection, but once the data were overlaid, it became evident that column performance was being restricted by entrainment.

After adjusting the simulation, Fluor advised rerouting a portion of the LVGO pump flow, as around 35% of the LVGO was found to be entrained in the MVGO section. The case demonstrated the importance of evaluating plant data, checking it for consistency and developing plausible explanations for problems.
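The article does not say how the data overlay was built. One common way to put a number on the overlap between adjacent side-draw cuts in laboratory distillation data is the 5-95 gap: the 5% point of the heavier cut minus the 95% point of the lighter cut, on a consistent distillation basis. A negative value means the boiling ranges overlap. The Python sketch below is illustrative only; the function names and all temperatures are hypothetical and are not Fluor's data or method.

```python
# Illustrative sketch: quantify overlap between adjacent vacuum column cuts
# using the 5-95 gap. All names and temperatures are hypothetical.


def fractionation_gap(light_t95: float, heavy_t5: float) -> float:
    """Return the 5-95 gap: T5 of the heavier cut minus T95 of the lighter cut.

    A positive value means the cuts are separated; a negative value means
    their boiling ranges overlap and the overlap needs an explanation.
    """
    return heavy_t5 - light_t95


def check_overlap(
    cuts: dict[str, dict[str, float]], order: list[str]
) -> list[tuple[str, str, float]]:
    """Compute the gap between each cut and the next heavier cut in `order`."""
    results = []
    for light, heavy in zip(order, order[1:]):
        gap = fractionation_gap(cuts[light]["T95"], cuts[heavy]["T5"])
        results.append((light, heavy, gap))
    return results


if __name__ == "__main__":
    # Hypothetical lab distillation points, deg C (not plant data from the case).
    lab = {
        "LVGO": {"T5": 300.0, "T95": 410.0},
        "MVGO": {"T5": 385.0, "T95": 480.0},
        "HVGO": {"T5": 490.0, "T95": 560.0},
    }
    for light, heavy, gap in check_overlap(lab, ["LVGO", "MVGO", "HVGO"]):
        status = "overlap" if gap < 0 else "separated"
        print(f"{light}/{heavy}: {gap:+.1f} C ({status})")
```

A negative gap on its own does not prove entrainment; it is a consistency flag that still has to be tied to a plausible physical explanation, as it was in this case.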

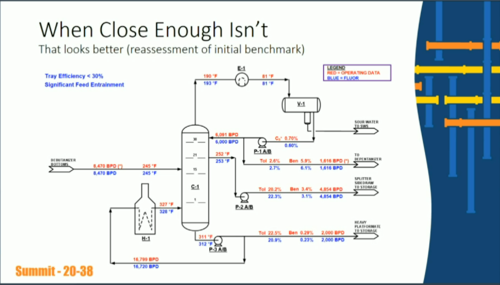

Example 2: When close enough isn't. In the second example, an initial benchmarking effort by "Contractor A" on a reformate splitter in 2011 arrived at a simulation column efficiency of 70% and a tray efficiency of 50%–70%. However, the plant data and the simulation results did not agree. Deviations in excess of 80%, and up to 99%, pointed to a problem with the simulation model, which predicted a better separation than was observed. Actual tray efficiency was poor compared with the simulated tray efficiency of 70%.

Fluor reassessed the initial benchmark and created an improved model (FIG. 1). Fluor tested the new model's robustness by checking whether its conclusions were reproducible on a different data set. Lessons learned included the need to verify results in the field and to avoid making assumptions. "This case reflects how important the time for benchmarking is to the project timeline," Jesus Cabrera noted.

FIG. 1. An improved benchmarking model.
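The presentation does not define how the plant-versus-simulation deviations were calculated. Assuming a relative deviation of each simulated value from the measured plant value, the sketch below shows why a model that predicts a much cleaner separation than the plant achieves produces deviations approaching 100%: if the simulated impurity is a small fraction of the measured impurity, the deviation is close to 100%. The component labels, values and the 20% flag threshold are hypothetical.

```python
# Illustrative sketch: relative deviation between plant measurements and a
# simulation, flagging values above a threshold. All data are hypothetical.


def percent_deviation(plant: float, simulated: float) -> float:
    """Absolute deviation of the simulated value from the plant value, in % of plant."""
    if plant == 0:
        raise ValueError("plant value must be non-zero for a relative deviation")
    return abs(simulated - plant) / abs(plant) * 100.0


def flag_deviations(
    plant: dict[str, float], simulated: dict[str, float], threshold_pct: float = 20.0
) -> dict[str, float]:
    """Return {name: deviation %} for every quantity above threshold_pct."""
    flagged = {}
    for name, measured in plant.items():
        dev = percent_deviation(measured, simulated[name])
        if dev > threshold_pct:
            flagged[name] = dev
    return flagged


if __name__ == "__main__":
    # Hypothetical impurity levels (wt%) in the splitter products.
    plant = {"heavy_key_in_overhead": 2.0, "light_key_in_bottoms": 1.0}
    simulated = {"heavy_key_in_overhead": 0.02, "light_key_in_bottoms": 0.9}
    for name, dev in flag_deviations(plant, simulated).items():
        print(f"{name}: {dev:.0f}% deviation")
```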

Example 3: Doing the splits. In the third example, a new train of exchangers was installed next to an existing train in a hydroprocessing unit. However, the required hydraulic balance between the two trains was not achieved, and the pressure of the stream passing from one train to the other was found to be out of balance. A hydrodynamic map of the operation was built to determine the cause of the hydraulic imbalance.

Fluor showed that the new train was at a lower level than the existing train, refuting the original licensor's theory. To solve the problem, Fluor proposed changes to the process and to the reactor piping to improve thermal distribution. In this case, using more than one modeling tool (including CFD analysis) helped rule out candidate explanations for the problem.
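How flow divides between parallel trains can be illustrated with a simple resistance model. Assuming single-phase flow, common inlet and outlet headers, and a pressure drop of the form dP = k * Q**2 in each train, both trains must see the same dP, so each train's flow is proportional to 1/sqrt(k). The resistance coefficients below are hypothetical, and the sketch deliberately ignores elevation and two-phase effects, which a real hydroprocessing case has to account for.

```python
import math

# Illustrative sketch: flow split between parallel trains that share common
# inlet and outlet headers. Assumes single-phase flow with dP = k * Q**2 in
# each train and ignores elevation and two-phase effects. Values are hypothetical.


def parallel_split(total_flow: float, resistances: list[float]) -> list[float]:
    """Return the flow through each parallel train.

    Every train sees the same header-to-header dP, so k_i * Q_i**2 is equal for
    all i, which makes Q_i proportional to 1 / sqrt(k_i).
    """
    if any(k <= 0 for k in resistances):
        raise ValueError("resistance coefficients must be positive")
    conductances = [1.0 / math.sqrt(k) for k in resistances]
    total_conductance = sum(conductances)
    return [total_flow * c / total_conductance for c in conductances]


def train_pressure_drops(total_flow: float, resistances: list[float]) -> list[float]:
    """Pressure drop across each train; with a correct split these are all equal."""
    flows = parallel_split(total_flow, resistances)
    return [k * q**2 for k, q in zip(resistances, flows)]


if __name__ == "__main__":
    # Hypothetical: the new train has four times the resistance of the existing one.
    resistances = [1.0, 4.0]  # [existing train, new train]
    flows = parallel_split(300.0, resistances)
    print("flows:", [round(q, 1) for q in flows])
    print("dP per train:", [round(dp, 1) for dp in train_pressure_drops(300.0, resistances)])
```

A model this simple can only attribute an uneven split to differences in resistance; explanations involving elevation or two-phase distribution need richer tools, such as the hydrodynamic mapping and CFD analysis described in the case.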

In conclusion, Garry Jacobs noted that the three process modeling and benchmarking studies showed that critical evaluation of plant data is needed to avoid poor equipment performance, because it pinpoints deficiencies that can affect equipment functionality, feedstock flow and other parameters. Sometimes, operating data cannot be adequately explained, and instrument calibration may be needed.

"Good engineering judgement is very important for technical risk mitigation," Jacobs said.
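A basic screening check of the kind Jacobs described, applied before plant data are used to tune a model, is mass balance closure: if the metered products do not add up to the metered feed within some tolerance, the data set needs explaining, possibly through instrument calibration. The sketch below assumes a 2% tolerance and uses hypothetical flow rates; neither comes from the presentation.

```python
# Illustrative sketch: mass balance closure check before using plant data for
# benchmarking. The 2% tolerance and flow rates are hypothetical.


def closure_error_pct(feed: float, products: list[float]) -> float:
    """Return (sum of products - feed) / feed, in %."""
    if feed <= 0:
        raise ValueError("feed rate must be positive")
    return (sum(products) - feed) / feed * 100.0


def needs_instrument_check(
    feed: float, products: list[float], tolerance_pct: float = 2.0
) -> bool:
    """True if the balance does not close within tolerance_pct."""
    return abs(closure_error_pct(feed, products)) > tolerance_pct


if __name__ == "__main__":
    # Hypothetical flow rates on a consistent mass basis, e.g. t/h.
    feed = 100.0
    for products in ([60.0, 30.0, 9.0], [60.0, 30.0, 5.0]):
        err = closure_error_pct(feed, products)
        check = needs_instrument_check(feed, products)
        print(f"closure error {err:+.1f}%, check instruments: {check}")
```

A closure error outside tolerance does not identify which meter is wrong; it only signals that the data set should not be used for benchmarking until the discrepancy is explained.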
