2021 AFPM Summit Virtual Edition: Five best practices for digital transformation initiatives in oil and gas

CRAIG HARCLERODE, Global O&G and Industrial Chemicals Industry Principal, OSIsoft

Following these best practices will significantly improve the probability of success for digital transformation initiatives, including the Industrial Internet of Things (IIoT), a portfolio of analytics involving “Big Data,” and improved OT-IT integration.

Digital transformation (DX) is a grand term encompassing many types of data sources, connections and analytics in support of cultural and work process redesign. DX initiatives, sometimes referred to as “Big Data,” are widely claimed to provide transformative business value. However, there is no strict formula or set of rules for how that value is delivered in practice. At best, this makes the whole DX concept confusing; at worst, organizations invest effort in a DX initiative but do not realize the anticipated benefits.

Industrial and commercial end users are well-positioned to take advantage of DX. They already have significant intelligent assets in service, with the required business computing and networking resources usually available. Their subject matter experts (SMEs) possess deep process knowledge and are poised to do even more with the right data at their fingertips. However, there are certainly challenges concerning cybersecurity, the fine details of how to gather so many small data sources into a useful whole, and what to do with the data once it is obtained.

DX adopters usually need to modify or upgrade their legacy operational technology (OT) systems, which typically focus more on command and control than on data gathering. They also must incorporate modern IIoT devices located at the edge of their operations by connecting these devices to OT systems. Finally, they must channel this data up to existing enterprise information technology (IT) systems. At a pragmatic level, this calls for a team with many skillsets to wrangle these similar but different systems, along with a higher-level vision of how to create useful results around the traditional operational excellence dimensions of people, processes and technology.

Many companies have realized positive DX experiences. Their successes point to five leading best practices for successfully “going digital”:

- Focus on supporting business strategy and business value led by OT, not technology led by IT

- Create smart asset templates owned and configured by the SMEs in an evolutionary approach

- Adopt a hybrid OT-IT data lake strategy with fit-for-purpose technology supporting both segments

- Define an analytics framework and adopt a “layers of analytics” approach

- Use an OT data model akin to the financial chart of accounts for bridging OT data to IT systems.

At some point, an organization attempting DX must jump in, but it can follow a relatively conservative approach that emphasizes practicality and financial viability. This white paper examines these five best practices for implementing Big Data projects with this approach in mind.

Why implement DX? A company should not implement DX simply to incorporate new technology for the sake of being cutting-edge. Rather, the DX endgame is about delivering value by evolving the culture and work processes with digital technology. When properly adopted, these new digital technologies enable proactive and visionary companies to transform themselves in several ways:

- Empowering their people with access to real-time operational intelligence

- Redesigning their work processes to be digitally enabled

- Leveraging and integrating a range of digital technologies.

DX helps companies become more intelligent because it empowers their people (and in some instances their customers) with access to contextualized and normalized operational data and information, also known as operational intelligence. Site operations personnel use performance dashboards to make plants run better day-to-day by making proactive, exception-based decisions. The result is higher value through improved efficiency and reduced operations and maintenance (O&M) and lost opportunity costs. When end users can access customer dashboards, the better visibility helps them improve business relationships and increase the return from the “digital value chain.”

Redesigning work processes to be digitally enabled provides access to previously unavailable data, automatically, with little or no delay and with exceptional accuracy. Even at a very basic level, DX projects reduce operator rounds, increase situational awareness and add the ability to both prevent and respond to abnormal operations and events. These improvements all promote safety and operational performance.

Digital technologies are not only about collecting and presenting data; they also determine what is done with it. A range of analytical methods, performed by people or by software, can produce predictive and prescriptive insights that help facilities proactively maintain their equipment and optimize their processes to improve product quality and throughput.

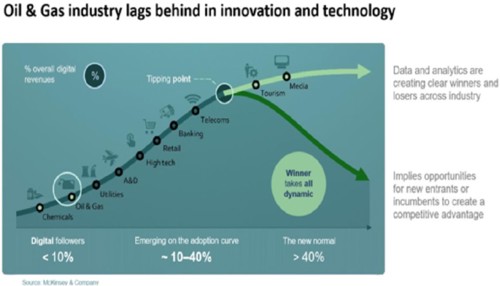

Many oil and gas (O&G) industry companies already incorporate significant technology for operational supervisory control and data acquisition (SCADA) systems and distributed control systems (DCSs). However, with respect to data and analytics innovation, they lag behind nimbler high-technology industries (FIG. 1). This means there are many opportunities within O&G to augment traditional SCADA and DCS platforms with DX projects to create competitive advantage.

FIG. 1. The oil and gas industries are relative laggards with respect to data and analytics innovation. (Reference: OSIsoft PI World 2019, DCP Midstream Keynote, slide 5)

When operational intelligence is combined with business intelligence to provide complete enterprise intelligence, organizations can realize operational excellence in the form of maximized value.

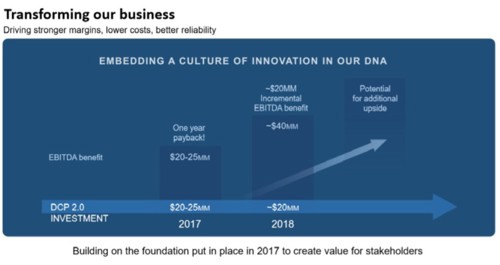

Driven by business needs. DX is the path to enterprise intelligence. However, not all organizations have been completely successful in mapping out this path. Companies are most successful when they remember that DX is ultimately about delivering business value, not about exploring and experimenting with technology (FIG. 2). The following approaches are common to smart businesses whose DX initiatives have resulted in successful implementations:

- Follow the “4S” approach—start small, simple and strategic

- Align business and digital strategies

- Build a high-level business case supported by key performance indicators (KPIs)

- Obtain end user and IT/OT input and support

- Guide the work with a “4M” strategy—“make me more money.”

FIG. 2. DX initiatives are most successful when they create stakeholder value, measurable by KPIs. (Reference: OSIsoft PI World 2019, DCP Midstream Keynote, slide 14)

A DX initiative should not be a grand all-or-nothing commitment; rather, it should follow the “4S” approach. Until a company has learned what works, the best advice is to start small, simple and strategic; however, the key is to start. Companies should choose a relatively bounded operational area that is likely to yield a positive result. Examples include increasing the run time of heat exchangers before fouling, or comparing several installations of a given asset type to look for outliers.
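The second pilot idea, comparing several installations of one asset type to look for outliers, can be sketched in a few lines of Python. This is a minimal illustration rather than a method from the source: it assumes one summary value per installation (here, hypothetical monthly average vibration readings) and uses the modified z-score based on the median absolute deviation (MAD), with the commonly cited 3.5 cutoff. A median-based score is chosen because it is less distorted by the very outlier it is trying to find.

```python
from statistics import median


def robust_z_scores(values: dict[str, float]) -> dict[str, float]:
    """Modified z-score of each installation, based on the median absolute deviation (MAD)."""
    med = median(values.values())
    mad = median(abs(v - med) for v in values.values())
    if mad == 0:
        # More than half the installations share one value; MAD cannot scale the scores.
        return {name: 0.0 for name in values}
    # 0.6745 makes the score comparable to a standard z-score for normally distributed data.
    return {name: 0.6745 * (v - med) / mad for name, v in values.items()}


def find_outliers(values: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Names of installations whose modified z-score exceeds the threshold."""
    scores = robust_z_scores(values)
    return sorted(name for name, z in scores.items() if abs(z) > threshold)


# Hypothetical monthly average vibration (mm/s) for six pumps of the same type.
pumps = {"P-101": 2.1, "P-102": 2.3, "P-103": 2.0, "P-104": 2.2, "P-105": 6.8, "P-106": 2.4}
print(find_outliers(pumps))  # → ['P-105']
```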

Starting small helps ensure that digital strategies are applied appropriately to the case and that business value is demonstrated. To prove the business case, the team will need to identify KPIs and then measure and document before and after conditions. In a sense, this is using data to show the value of the data.
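The before-and-after measurement can be as simple as comparing a KPI's average across the two periods. The sketch below assumes the heat exchanger example mentioned earlier, with hypothetical run lengths (days between cleanings) as the KPI; the function name and data are illustrative. A real business case would also need to account for changes in feed, throughput and other confounding factors.

```python
from statistics import mean


def kpi_change(before: list[float], after: list[float]) -> dict[str, float]:
    """Average a KPI over the baseline and pilot periods and report the percent change."""
    b, a = mean(before), mean(after)
    return {"before": b, "after": a, "pct_change": 100.0 * (a - b) / b}


# Hypothetical heat exchanger run lengths (days between cleanings).
baseline = [90, 100, 110]
pilot = [120, 130, 125]
print(kpi_change(baseline, pilot)["pct_change"])  # → 25.0
```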

From a soft-skills standpoint, DX project teams should cultivate end user, IT and OT support. This may be easier said than done. However, if each of these parties can contribute to the work in their area of strength, success is more likely. When in doubt at any point, fall back to a simple “4M” strategy for guiding the work.

Smart asset templates make sense of data. Smart devices have grown more intelligent at an exponential rather than a linear rate. Traditional devices provided only one or two process variables, and sometimes none at all because they lacked connectivity; once a device is networkable, it typically supplies dozens of diagnostic, operational and other types of data points. An abundance of data from so many assets can be overwhelming for fledgling DX efforts.

There are proven ways to bring order to this type of chaos and provide a structure for curating the most important data, helping DX projects to be successful initially and during scale-up. Useful steps for making sense of the data as a DX initiative begins and proceeds include:

- Use a technology that can abstract and normalize tagging and asset names, units of measure, and time zones to create a company lexicon

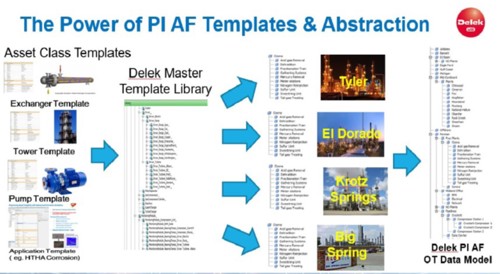

- Evolve configurable smart asset templates (FIG. 3) owned by the SMEs

- Structure these templates to enable rollout at scale

- Start simple and evolve the asset template over time

- Implement smart object models that make sense at both OT and IT levels.

FIG. 3. Smart asset templates should be evolved and owned by SMEs, and they should define an OT data model that can be deployed and maintained at scale. (Reference: Delek US use of smart asset templates, PI World 2018)
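The first step in the list above, abstracting and normalizing tag names, units of measure and time zones into a company lexicon, might look like the following minimal Python sketch. The alias table, the choice of kPa as the company pressure standard and the fixed UTC offset are hypothetical assumptions for illustration. A production system would manage these mappings in its asset framework and would use IANA time zones (for example, Python's `zoneinfo`) so that daylight saving time is handled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical aliases: two sites name the same measurement differently.
TAG_ALIASES = {
    "SITEA.P101.DISCH_PRESS": "Pump/P-101/Discharge Pressure",
    "51PI1001.PV": "Pump/P-101/Discharge Pressure",
}

# Conversion factors to the assumed company-standard pressure unit, kPa.
TO_KPA = {"kPa": 1.0, "psi": 6.894757, "bar": 100.0}


@dataclass
class Reading:
    attribute: str          # name in the company lexicon
    value_kpa: float        # value in the standard unit
    timestamp_utc: datetime


def normalize(tag: str, value: float, unit: str,
              local_time: datetime, utc_offset_hours: float) -> Reading:
    """Map a raw site reading onto the company lexicon, standard units and UTC."""
    attribute = TAG_ALIASES[tag]
    value_kpa = value * TO_KPA[unit]
    site_tz = timezone(timedelta(hours=utc_offset_hours))  # fixed offset: ignores daylight saving
    timestamp_utc = local_time.replace(tzinfo=site_tz).astimezone(timezone.utc)
    return Reading(attribute, value_kpa, timestamp_utc)


r = normalize("51PI1001.PV", 100.0, "psi", datetime(2021, 3, 1, 8, 0), -6)
print(r.attribute, round(r.value_kpa, 1), r.timestamp_utc.isoformat())
# → Pump/P-101/Discharge Pressure 689.5 2021-03-01T14:00:00+00:00
```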

Creating and evolving smart asset templates is the most basic strategy for effectively handling massive amounts of data. Much like object-oriented programming as an approach to software development, smart asset templates arranged in a dynamic hierarchical library structure are the best way to identify, organize and access the massive quantity of digitally available data streams.

Each basic asset class, like a pump, requires a generic structure with pertinent data. For a pump, this might include sensor values while running, such as vibration, amperage, pressures and temperatures. This information can be augmented with calculations and totalizations for data (such as run times) and events (such as a failure).
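As a rough illustration of a template that combines measured attributes with derived totals and events, the sketch below defines a pump asset built from a hypothetical generic pump template. The attribute names, the RUN/STOP/TRIP status convention and the step-wise totalization (each status holds until the next sample) are assumptions, not the structure of any particular product's templates.

```python
from dataclasses import dataclass, field


@dataclass
class PumpAsset:
    """A pump instance created from a hypothetical generic pump template."""
    name: str
    # Sensor attributes every pump instance exposes (illustrative names).
    sensors: tuple = ("vibration", "amperage", "suction_pressure",
                      "discharge_pressure", "temperature")
    # Status history as (hour, status) samples, where status is "RUN", "STOP" or "TRIP".
    status: list = field(default_factory=list)

    def run_hours(self) -> float:
        """Totalize run time, assuming each status holds until the next sample."""
        total = 0.0
        for (t0, s0), (t1, _) in zip(self.status, self.status[1:]):
            if s0 == "RUN":
                total += t1 - t0
        return total

    def failure_events(self) -> list:
        """Times at which the pump changed into the TRIP state."""
        return [t1 for (_, s0), (t1, s1) in zip(self.status, self.status[1:])
                if s1 == "TRIP" and s0 != "TRIP"]


p101 = PumpAsset("P-101", status=[(0, "RUN"), (10, "STOP"), (12, "RUN"),
                                  (30, "TRIP"), (34, "RUN"), (40, "RUN")])
print(p101.run_hours(), p101.failure_events())  # → 34.0 [30]
```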

Templates can also include analytics derived from formulaic or empirical data (e.g., performance curves) to enable predictive analytics and design vs. actual comparisons.
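A design vs. actual comparison of this kind can be sketched by interpolating the datasheet performance curve at the measured flow and comparing the result with the head calculated from measured pressures. The curve points and pressures below are hypothetical, the fluid is assumed to be water at about 1,000 kg/m³, and linear interpolation between datasheet points is a simplifying assumption.

```python
from bisect import bisect_right

# Hypothetical datasheet performance curve: flow (m3/h) vs. design head (m).
CURVE_FLOW = [0.0, 50.0, 100.0, 150.0]
CURVE_HEAD = [80.0, 76.0, 66.0, 50.0]
G = 9.80665  # standard gravity, m/s2


def design_head(flow: float) -> float:
    """Linearly interpolate the design head at a given flow."""
    if not CURVE_FLOW[0] <= flow <= CURVE_FLOW[-1]:
        raise ValueError("flow is outside the datasheet curve")
    i = min(bisect_right(CURVE_FLOW, flow), len(CURVE_FLOW) - 1)
    q0, q1 = CURVE_FLOW[i - 1], CURVE_FLOW[i]
    h0, h1 = CURVE_HEAD[i - 1], CURVE_HEAD[i]
    return h0 + (flow - q0) * (h1 - h0) / (q1 - q0)


def head_from_pressures(suction_kpa: float, discharge_kpa: float,
                        density: float = 1000.0) -> float:
    """Actual head (m) from the pressure rise: H = dP / (rho * g), with dP in Pa."""
    return (discharge_kpa - suction_kpa) * 1000.0 / (density * G)


def deviation_pct(flow: float, actual_head: float) -> float:
    """Percent deviation of actual head from design head at the same flow."""
    expected = design_head(flow)
    return 100.0 * (actual_head - expected) / expected


actual = head_from_pressures(suction_kpa=150.0, discharge_kpa=790.6)
print(round(design_head(75.0), 1), round(actual, 1), round(deviation_pct(75.0, actual), 1))
# → 71.0 65.3 -8.0
```

A persistent negative deviation at normal flows is the kind of signal that could feed the predictive layer, for example as an early indicator of impeller wear.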

Implementers may naturally feel a need to develop an overarching scheme to handle all situations, which is admirable. But this type of approach can hinder initial progress, so implementers need to understand that smart asset templates are developed over time and improved as best practices are identified. Therefore, it is best to start small with a few asset classes, find what works, and then evolve the templates in a continuous improvement cycle.

Of course, applying some foresight is desirable. Template structures should be suitable for enabling rollout and maintenance at pace and scale. If there are many types of pumps, perhaps there is a “parent” pump template defining the most common data points of interest and associated “children” pump templates with added type-specific values. Geography plays an important role when considering a fleet of assets in service within different plant areas, or even across multiple sites, so any template library should include provisions to identify process and physical locations.
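The parent/child idea and the location provisions described above can be sketched with two small Python classes. This is a hypothetical illustration of template inheritance, not the data model of any specific asset framework; the class, function and attribute names are assumptions. A child template inherits its parent's attributes and adds type-specific ones, and each deployed instance records its site and process area so a fleet can be rolled out and queried by location.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AssetTemplate:
    name: str
    attributes: list
    parent: Optional["AssetTemplate"] = None

    def all_attributes(self) -> list:
        """Parent attributes first, then the child's type-specific additions."""
        inherited = self.parent.all_attributes() if self.parent else []
        return inherited + [a for a in self.attributes if a not in inherited]


@dataclass
class AssetInstance:
    tag: str
    template: AssetTemplate
    site: str
    process_area: str

    def path(self) -> str:
        """Location-aware path so the same template can be queried across sites."""
        return f"{self.site}/{self.process_area}/{self.tag}"


def deploy(template: AssetTemplate, tags: list, site: str, process_area: str) -> list:
    """Roll one template out to many assets in a single step."""
    return [AssetInstance(tag, template, site, process_area) for tag in tags]


pump = AssetTemplate("Pump", ["vibration", "amperage", "discharge_pressure"])
centrifugal = AssetTemplate("Centrifugal Pump", ["seal_pot_level", "vibration"], parent=pump)
fleet = deploy(centrifugal, ["P-101", "P-102"], "Site A", "Crude Unit")
print(centrifugal.all_attributes())
# → ['vibration', 'amperage', 'discharge_pressure', 'seal_pot_level']
print([asset.path() for asset in fleet])
# → ['Site A/Crude Unit/P-101', 'Site A/Crude Unit/P-102']
```

Changing the parent template (for example, adding a bearing temperature attribute to "Pump") would then flow to every child template and deployed instance, which is what makes maintenance at scale practical.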

One final thought is that these smart object models must make sense for all users, especially at both the OT and IT levels. All stakeholders must be able to obtain and handle the DX data important to them in ways consistent with how they work.

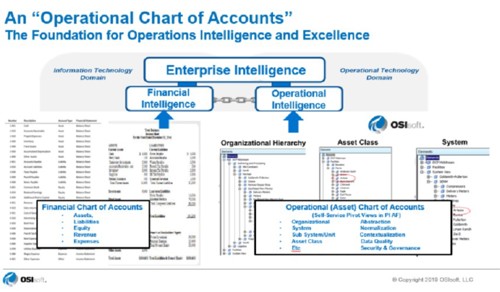

Hybrid data lake strategy—An IT and OT chart of accounts. Many early DX implementers have embarked with a sense that all data would simply end up pouring into a massive “data lake” in the cloud. However, this unorganized approach is flawed and more likely to result in a “data swamp” where users get bogged down and become unable to progress. Just as smart asset templates are used to organize incoming data streams, an organizational method with normalization and contextualization is required to define how data is stored.

Financial accounting practices commonly employ a “chart of accounts” concept to identify and navigate all financial assets owned by an organization. Thinking of data as an asset leads to the realization that all data storage locations can be considered as accounts, and therefore all available repositories can be handled through an “operational chart of accounts” (FIG. 4). This hybrid approach brings organization to the data lake and is adaptable to storing data in both on-premises and cloud-based locations, as well as in any form of database, data warehouse or other archive type. A hybrid data lake strategy helps empower flexible data access for successful DX projects.

FIG. 4. An OT “chart of accounts” approach brings context and normalization to the chaos of multiple data types often found in a portfolio of assets. (Hybrid Data Lake Strategy and Creation of an “OT Chart of Accounts.” Reference: 9th Annual OpEx Summit, Houston, Texas, November 7, 2018, “Going Digital in OpEx - From Tired to Wired” by the author)
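To make the chart-of-accounts analogy concrete, the sketch below treats each data category as an account with a hierarchical code and records which repository holds it and whether that repository is on premises or in the cloud. The account codes, descriptions and repositories are hypothetical; the point is that users navigate by account rather than by physical storage location.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAccount:
    code: str          # hierarchical account code, like a financial chart of accounts
    description: str
    repository: str    # where the data physically lives
    location: str      # "on-premises" or "cloud"


# Hypothetical operational chart of accounts.
CHART = [
    DataAccount("OPS.ROT.PUMP.VIB", "Pump vibration time series", "site historian", "on-premises"),
    DataAccount("OPS.ROT.PUMP.MAINT", "Pump work orders", "maintenance system", "cloud"),
    DataAccount("OPS.HX.FOULING", "Exchanger fouling factors", "site historian", "on-premises"),
    DataAccount("OPS.HX.LAB", "Exchanger fluid lab analyses", "data warehouse", "cloud"),
]


def accounts_under(prefix: str) -> list:
    """All accounts at or below a node of the hierarchy."""
    return [a for a in CHART if a.code == prefix or a.code.startswith(prefix + ".")]


def repositories_for(prefix: str) -> list:
    """Distinct (repository, location) pairs a user would need to reach for a node."""
    return sorted({(a.repository, a.location) for a in accounts_under(prefix)})


print([a.code for a in accounts_under("OPS.ROT")])
# → ['OPS.ROT.PUMP.VIB', 'OPS.ROT.PUMP.MAINT']
print(repositories_for("OPS.HX"))
# → [('data warehouse', 'cloud'), ('site historian', 'on-premises')]
```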

A hybrid strategy is necessary because of the irregular data sources involved. DX initiatives must handle data that is tabular, unstructured, event-based or linear-/time-based, and that may be in an asset context. Data may arrive continuously, or be deferred and delivered in batches with timestamps. Most industrial processes generate time-series data, which poses some unique challenges, but associating metadata with it brings context to the chaos.
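One practical consequence of data that arrives both continuously and in deferred, timestamped batches is that the streams must be merged back into time order before context is applied. The Python sketch below assumes each stream is a list of (timestamp, value) pairs, keeps the copy merged last when a batch re-sends a timestamp already received, and then attaches hypothetical asset metadata to every point. The function names and the metadata keys are illustrative assumptions.

```python
import heapq
from datetime import datetime


def merge_streams(*streams):
    """Merge live and late-arriving batches into one time-ordered series."""
    merged = []
    ordered = heapq.merge(*(sorted(s) for s in streams), key=lambda point: point[0])
    for ts, value in ordered:
        if merged and merged[-1][0] == ts:
            merged[-1] = (ts, value)  # duplicate timestamp: keep the copy merged last
        else:
            merged.append((ts, value))
    return merged


def with_context(samples, metadata: dict) -> list:
    """Attach asset metadata to each (timestamp, value) point."""
    return [{"timestamp": ts, "value": value, **metadata} for ts, value in samples]


live = [(datetime(2021, 3, 1, 8, 0), 41.2), (datetime(2021, 3, 1, 8, 10), 41.5)]
batch = [(datetime(2021, 3, 1, 8, 10), 41.5), (datetime(2021, 3, 1, 8, 5), 41.3)]  # deferred upload
series = merge_streams(live, batch)
rows = with_context(series, {"asset": "E-201", "attribute": "Outlet Temperature", "uom": "degC"})
print([row["timestamp"].strftime("%H:%M") for row in rows])  # → ['08:00', '08:05', '08:10']
```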

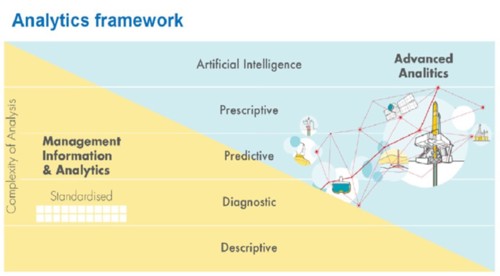

Analytics at any layer. Once the data gathering, organizing and storage approaches are in place, the next step becomes how to effectively analyze this data and obtain useful results. Turning operational data into intelligence requires a range of analytical approaches. Successful DX implementers have found that a framework built by adopting a “layers of analytics” approach is most effective (FIG. 5).

FIG. 5. Defining and leveraging an analytical framework with progressively more advanced layers is the best approach for turning operational data into intelligence. (Analytics distribution: yellow in the OT data lake and blue in the IT data lake. Layers of Analytics. Reference: EMEA 2018 PI World presentation, “Shell’s Journey to Advanced Analytics”)

Results can be descriptive, diagnostic, predictive and prescriptive. The smart asset objects should form the foundation of these layers of analytics, up through the portion of predictive analytics that relies on formulaic or empirical methods. Higher-level analytics such as machine learning (ML) and artificial intelligence (AI) should address defined business cases.
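The four result types can be illustrated on a single, hypothetical heat exchanger. The sketch below computes one simple example per layer from daily heat-transfer coefficient (U) values: a descriptive average, a diagnostic fouling percentage against the clean value, a predictive straight-line trend to a minimum acceptable U, and a prescriptive clean-by day that leaves a maintenance lead time. The straight-line trend is a deliberate simplification standing in for the formulaic or empirical models a template layer would hold; the ML and AI layers are beyond the scope of this sketch.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def layers_of_analytics(days, u_values, u_clean, u_limit, lead_days):
    # Descriptive: what has the exchanger been doing?
    avg_u = mean(u_values)
    # Diagnostic: how far has performance degraded from the clean condition?
    fouling_pct = 100.0 * (1.0 - u_values[-1] / u_clean)
    # Predictive: on what day will U reach the minimum acceptable value?
    slope, intercept = fit_line(days, u_values)
    limit_day = (u_limit - intercept) / slope if slope < 0 else None
    # Prescriptive: by what day should cleaning be scheduled?
    clean_by = limit_day - lead_days if limit_day is not None else None
    return {
        "descriptive_mean_u": avg_u,
        "diagnostic_fouling_pct": fouling_pct,
        "predictive_limit_day": limit_day,
        "prescriptive_clean_by_day": clean_by,
    }


# Hypothetical daily U values (W/m2K) for one exchanger.
result = layers_of_analytics([0, 1, 2, 3, 4, 5], [500, 490, 480, 470, 460, 450],
                             u_clean=500, u_limit=400, lead_days=3)
print(result)
```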

The sheer variety of techniques resists being forced into a limited number of standardized approaches. DX implementers must be ready to justify each analytic against the result it is meant to deliver, while remaining flexible enough to apply it at the level where it makes the most sense. This approach often must be adjusted over time based on operational experience.

For instance, analytical processing power was originally available only at the highest levels of computing infrastructure. However, increasingly powerful edge devices now make it possible to perform analytics, or at least to pre-process data, much closer to field sources, which minimizes upstream computing, storage and bandwidth demands.
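Edge pre-processing can be as simple as not forwarding readings that have barely changed. The sketch below is a basic deadband filter with a heartbeat: a reading is sent only if it moves more than a set amount from the last sent value, or if a maximum interval has passed since the last send. It is a simpler, swapped-in technique for illustration, not the exception and compression algorithms used by commercial historians, and the function name and parameter values are hypothetical.

```python
def deadband_filter(samples, deadband: float, max_interval: float) -> list:
    """Forward (t, value) samples only on significant change or when a heartbeat is due."""
    sent = []
    for t, value in samples:
        if (not sent
                or abs(value - sent[-1][1]) > deadband
                or t - sent[-1][0] >= max_interval):
            sent.append((t, value))
    return sent


# Hypothetical one-second temperature readings at an edge device.
readings = list(enumerate([50.0, 50.1, 50.05, 50.3, 50.35, 50.9, 50.95, 51.0, 50.98, 51.0]))
forwarded = deadband_filter(readings, deadband=0.25, max_interval=4)
print(forwarded)  # → [(0, 50.0), (3, 50.3), (5, 50.9), (9, 51.0)]
print(f"{len(forwarded)} of {len(readings)} readings sent upstream")
```

The deadband trades some resolution for bandwidth; setting it per attribute, based on instrument accuracy, is one reasonable way to choose the value.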

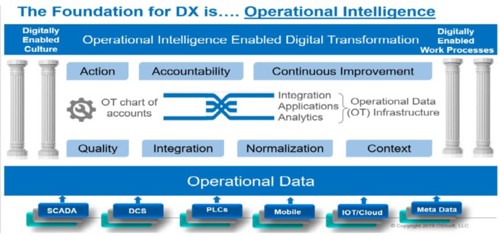

Operational intelligence enables DX. The preceding sections have discussed how DX data travels and is processed throughout a range of OT and IT systems. DX implementers realize that OT and IT are intimately related, yet have very different data needs.

OT data is real-time and is generated by critical operations; it must be secure, contextualized and normalized, and it must be immediately actionable (FIG. 6). IT requires access to quality and performance values, generally as averaged operational data in context, so that it can integrate them with transactional and other types of data.

FIG. 6. The foundation of DX is operational intelligence enabled by an operational data infrastructure. The elements of traditional operational excellence (OpEx) include people, process and technology and the associated continuous improvement—all key parts of operational intelligence and DX. (Reference: 9th Annual OpEx Summit, Houston, Texas, November 7, 2018, “Going Digital in OpEx - From Tired to Wired” by the author)
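The gap between real-time OT values and the averaged values IT typically consumes is easy to underestimate, because historian data is usually sampled irregularly. A plain average of the samples over-weights bursts of readings, whereas a time-weighted average weights each value by how long it was in effect. The sketch below assumes step behavior (each value holds until the next sample) and that the first sample is at or before the start of the interval; many historians also offer interpolated averages for analog signals. The data are hypothetical.

```python
def time_weighted_average(samples, start: float, end: float) -> float:
    """Step-hold time-weighted average of sorted (t, value) samples over [start, end).

    Assumes the first sample is at or before start.
    """
    total = 0.0
    boundaries = samples[1:] + [(end, None)]
    for (t0, v0), (t1, _) in zip(samples, boundaries):
        lo, hi = max(t0, start), min(t1, end)
        if hi > lo:
            total += v0 * (hi - lo)
    return total / (end - start)


# Hypothetical flow readings (minute, m3/h) within one hour.
samples = [(0, 100.0), (15, 110.0), (20, 90.0), (50, 100.0)]
print(round(time_weighted_average(samples, 0, 60), 2))  # → 95.83
print(sum(v for _, v in samples) / len(samples))        # → 100.0 (simple average)
```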

The most successful implementers of DX initiatives have learned to bridge OT to IT effectively through a comprehensive critical operations data infrastructure. This infrastructure is akin to the financial chart of accounts, which normalizes currencies, units of measure and categories so that users can pivot into different financial views. The operational data infrastructure enables the following activities:

- Pervasive integration to a wide range of operational data and information systems

- Configured applications and solutions, such as advanced condition-based maintenance

- A foundation for a “layers of analytics” strategy.

Many view this critical operations data model as an “OT data lake” that augments and complements the more traditional, cloud-based “IT data lake” implemented in a hybrid data lake architecture.

More specifically, the OT data model is the best location to perform foundational calculations, descriptive analytics, and some predictive analytics that are based on first-principles equations or empirical correlations. The results of these calculations, totals and analytics can be historized with the sensor data and then served up to higher-level analytics such as advanced pattern recognition (APR), AI and ML.

This can be accelerated by creating a self-service, configurable integration layer that enables end users, not IT, to cleanse, augment, shape and transmit (CAST) OT data so that it is more easily consumed by unstructured IT systems. CAST elements can be located anywhere, but the closer to the OT edge, the better.
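The four CAST steps map naturally onto a small pipeline. The Python sketch below is a hypothetical illustration, not a product feature: `cleanse` drops readings flagged as bad or outside a plausible range, `augment` adds asset context, `shape` flattens each point into the record layout an IT consumer is assumed to expect, and `transmit` serializes the records to JSON (the actual network send is left out). The field names and range limits are assumptions.

```python
import json


def cleanse(samples: list, low: float, high: float) -> list:
    """Drop readings with bad quality or values outside a plausible range."""
    return [s for s in samples if s["good"] and low <= s["value"] <= high]


def augment(samples: list, context: dict) -> list:
    """Add asset context (name, attribute, unit) to each reading."""
    return [{**s, **context} for s in samples]


def shape(samples: list) -> list:
    """Flatten to the record layout the IT consumer is assumed to expect."""
    keys = ("asset", "attribute", "ts", "value", "uom")
    return [{k: s[k] for k in keys} for s in samples]


def transmit(records: list) -> str:
    """Serialize for transport; sending the payload is out of scope here."""
    return json.dumps(records)


raw = [
    {"ts": "2021-03-01T14:00:00Z", "value": 689.5, "good": True},
    {"ts": "2021-03-01T14:01:00Z", "value": -9999.0, "good": True},  # sentinel value
    {"ts": "2021-03-01T14:02:00Z", "value": 690.1, "good": False},   # bad quality flag
]
context = {"asset": "P-101", "attribute": "Discharge Pressure", "uom": "kPa"}
payload = transmit(shape(augment(cleanse(raw, 0.0, 2000.0), context)))
print(payload)
```

Keeping each step a separate, configurable function is what would let end users adjust the pipeline themselves, and it allows the cheap steps (cleanse, shape) to run at the edge while enrichment runs wherever the context lives.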

In fact, the preceding best practices (enabling the SMEs to configure and evolve smart asset templates, adopting a hybrid data lake strategy and embracing a layered analytics approach) are all conducive to seamlessly bridging OT and IT systems through consistent, robust data modeling. Even as users start small, they should always look for opportunities to facilitate integration.

Many organizations are looking at how they can benefit from DX projects, but are not sure how to begin because there are so many variables and options spanning large numbers of assets, geographical locations and technologies. The potential value is visible, but there is not a clear one-size-fits-all method of determining the path to success.

This article has presented five pragmatic and proven best practices based on companies delivering transformative business value and supporting their 21st century business strategies. A constant focus on business value and leadership from OT business owners, supported by IT, is paramount. Smart asset templates organize the vast amounts of raw data, turning disparate critical operational data and metadata into self-serve, actionable operational intelligence. A fit-for-purpose hybrid data lake strategy stores the data where it makes the most sense, while an “OT chart of accounts” identifies aggregated data from across a portfolio of assets. Analytics can then be applied at the system level where they are the best fit.

Finally, an overarching approach of leveraging IIoT and OT infrastructure as the foundation for building bridges to IT will enable raw data to be digitally transformed into operational intelligence. Following these steps greatly improves the likelihood of implementing DX successfully and in a timely manner, with greater cost and schedule certainty.

ABOUT THE AUTHOR

Craig Harclerode is a Global O&G and Industrial Chemicals Industry Principal at OSIsoft, where he consults with companies on how the PI System can add value to their organizations as a strategic OT real-time integration, applications and analytics infrastructure. Mr. Harclerode focuses on digitally enabled business transformation that addresses the dimensions of people/culture and process reengineering by leveraging the PI System in the areas of IIoT/edge, PI AF digital twins, layers of analytics and “Big Data” to deliver transformative business value.

His 38-year career has included engineering, operations and automation in supervisory, executive management and consultative roles at Amoco Oil/Chemicals (16 years–CAO) and solutions providers Honeywell (6 years–VP SW Ops) and AspenTech (6 years–Director, OpEx).

He holds a BS degree in chemical engineering from Texas A&M University and an MBA from Rice University, and he is a former certified Project Management Professional (PMP). Mr. Harclerode has produced numerous publications and regularly presents as a thought leader at conferences and events around the world on the subject of “going digital.”
